The Times They Are A-Changin'

Cogitations and ruminations on technologies past, present, and future.

December 13, 2023

I must admit that I’m starting to feel like an old fool, but where are we going to find one at this time of the day (these are the jokes The Muppet Show refused)? I was just recalling the classic The Times They Are A-Changin' song by Bob Dylan and thinking how true this sentiment is, especially when it comes to technology. I’m also reminded of the ancient Greek philosopher Heraclitus, who famously noted, “The only constant in life is change” (I’m not sure if this is repudiated or complemented by that philosopher of our times, Jon Bon Jovi, who observed, “The more things change the more they stay the same”).

As an aside, a few weeks ago I read Bob Dylan’s memoir, Chronicles: Volume One, which provides a deep and fascinating insight into his origin story. It was here I discovered that he was born Robert Allen Zimmerman—he selected his stage name as a combination of Bob, short for Robert, and Dylan after the Welsh poet and writer, Dylan Thomas. But we digress…

Also, I recently watched a brilliant film called The Man Who Invented Christmas. This is a strange form of biographical comedy-drama that somehow works magnificently (so much so that I just ordered a DVD to be delivered to my dear old mom in England for her to watch over Christmas). It’s about Charles Dickens and his writing of A Christmas Carol. In addition to being an awesome movie suitable for kids of all ages (from 8 to 80+), I learned all sorts of things about Charles himself, such as the fact he spent two years in a workhouse starting at the age of 12 when his father was committed to a debtors’ prison.

The reason I mention all this is that it’s around this time of year I find myself cogitating and ruminating on the technological ghosts of Christmas past, present, and future (and if that’s not a segue to remember, I don’t know what is LOL).

When I talk to younger folks about the technologies we had when I was a kid, they look at me with wide eyes brimming with disbelief. Strangely, the three little girls who live next door exhibited no skepticism whatsoever when I informed them that one of my chores when I was their age was to shoo the dinosaurs out of our family’s cabbage patch (this is obviously a joke because my family never owned a cabbage patch).

I’m a science fiction fan. I remember watching the first episode of Doctor Who, An Unearthly Child, which was transmitted at 5:16 p.m. on Saturday, November 23, 1963. I was only 6 years old, but I remember it like it was yesterday (which isn’t saying much because I don’t remember what happened yesterday). The first notes of the theme music informed me that this was a program that would be best watched from the safety found behind the sofa. This was the first electronic music I’d ever heard. Prior to that, every TV program I had seen was accompanied by orchestral compositions.

As another aside, I once met Nick Martin, who hails from Down Under. Nick was the founder of the EDA company Protel Systems, which evolved into Altium, the purveyor of PCB design software we know and love today. We were chatting at an Embedded Systems Conference (ESC). It turns out that Nick started watching Doctor Who from behind his parents’ sofa. “Great minds think alike,” as they say. Of course, they also say, “Fools seldom differ,” but I’m sure that doesn’t apply to us.

I’m sorry. What were we talking about? Oh yes, televisions when I was a lad. Ours involved a very small screen in a very large and heavy cabinet that dominated the room, presenting its low-resolution eye candy in glorious black-and-white. If someone at that time had described a future involving 65-in. (and up) flat, high-resolution, COLOR televisions in most of the rooms in a house, I would have thought we were talking about a timeframe reminiscent of Buck Rogers in the 25th Century. And yet, here we are.

When I talk to younger engineers about the tools and technologies available when I was starting out, I can see the disbelief in their eyes. I hail from those days of yore when 7400-Series TTL and 4000-Series CMOS devices containing only a few handfuls of logic gates were considered to be state of the art. I also watched as early microprocessors—like the 8080, 6502, and Z80—appeared on the scene, accompanied by single board computers (SBCs) that employed these devices, like the KIM-1, for example. Following its launch in 1976 and throughout the remainder of the 1970s, I drooled with desire whenever I saw an advert in an electronics magazine for a KIM-1, but I was an impoverished student, and this beauty was well beyond my meager means.

I’m tempted to chat about technologies like chiplets, but I wrote about them recently (see Cheeky Chiplets Meet Super NoCs). Things are moving extremely quickly in this arena. Since that column, which dates from only a couple of months ago, a new player—Zero ASIC—has leapt onto the center of the stage with a fanfare of sarrusophones (once heard, never forgotten).

I’m also tempted to talk about technologies like the use of Generative AI (GenAI) for software and hardware design, but I covered these topics a few weeks ago (see Feel the Flux!). At that time, on the hardware front, GenAI was being used only in the context of PCBs. Since then, I’ve seen announcements from EDA companies like Cadence and Siemens talking about using GenAI in the context of ASIC and SoC designs.

Eventually, I decided to stick with something that is close to my heart—microcontroller units (MCUs). I remember the arrival of the 8-bit Intel MCS-51, which is more commonly known as the 8051. Released in 1981, this little rascal has now sold in billions of units. I knew the creator of the 8051’s architecture—John Wharton (RIP). He once told me that, as a young engineer, he used to lunch with one of his supervisors. One day, this supervisor told John of a lunchtime meeting at which free sandwiches were to be served, so they both snuck in. It turned out this was the kick-off meeting for Intel’s latest and greatest microcontroller, whose architecture at that time was undefined. Following that meeting, John returned to his desk and sketched out the architecture that became the Intel 8051.

John passed in 2018. I wish he were still here so I could chat with him about some new MCUs that were recently announced by a company called Alif Semiconductor.

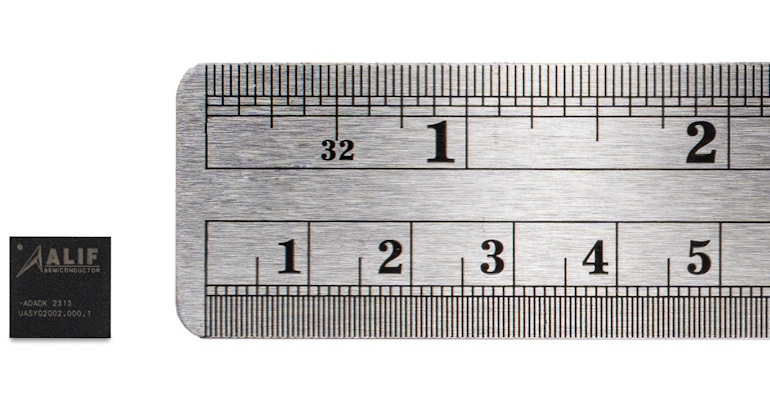

Ensemble MCU (Source: Alif Semiconductor)

Physically, these devices are tiny, but processing-wise… oh my goodness gracious me. The Ensemble family ranges from a single 32-bit processor with an optional micro neural processing unit (microNPU), to four 32-bit processors and two microNPUs. In addition to on-die graphics imaging (GPU, MIPI-DSI, MIPI-CSI) and an on-die secure enclave, these little scamps also feature up to 13.5 MB of on-die SRAM and up to 5.75 MB of on-die NVM (in the form of MRAM).

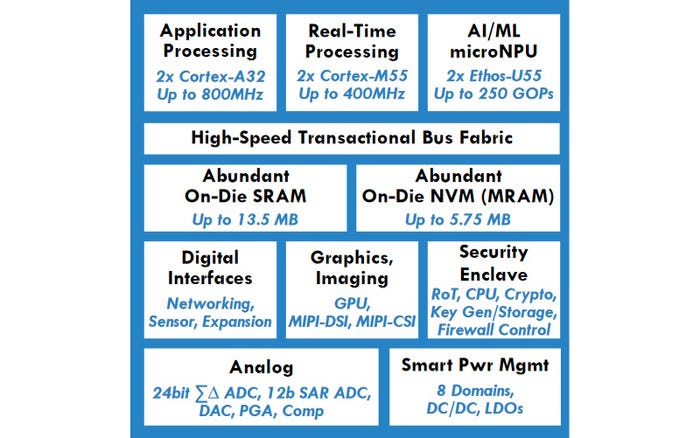

Block diagram of the Ensemble quad-core Fusion processor (Source: Alif)

As compared to traditional MCUs, Ensemble devices are claimed to provide two orders of magnitude more performance while consuming two orders of magnitude less power. What all this means is that these bodacious beauties can be used to perform edge-IoT tasks like object detection, such as working out which portion of an image is a human face, for example. This isn’t the sort of thing you would consider attempting with a traditional MCU, but it can be realized using only a mid-range Ensemble MCU. Furthermore, it can be achieved without the need for external memory, power management, or additional security devices. “Wowzers!” is all I can say.
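As a back-of-the-envelope sketch (my arithmetic, not Alif's), suppose we take both "two orders of magnitude" claims at face value and assume they hold at the same time. The function name and the normalized figures below are placeholders I made up for illustration; they are not measured data.

```python
# Rough illustration only: normalized placeholder figures, not measured data.
PERF_GAIN = 10 ** 2        # claimed: two orders of magnitude more performance
POWER_REDUCTION = 10 ** 2  # claimed: two orders of magnitude less power


def perf_per_watt_gain(perf_gain: int, power_reduction: int) -> int:
    """Combined performance-per-watt gain, assuming both claims hold at once."""
    return perf_gain * power_reduction


if __name__ == "__main__":
    gain = perf_per_watt_gain(PERF_GAIN, POWER_REDUCTION)
    print(f"Performance-per-watt gain: {gain:,}x")
```

If both claims really do apply simultaneously, that would be four orders of magnitude in performance per watt, which goes some way toward explaining why face detection on a coin-cell-class device is no longer a silly idea.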

As I’ve mentioned before, when I designed my first ASIC circa 1980, this was at the 5-micron technology node. Today, some companies like Apple are deploying chips implemented at the 3-nm (three-nanometer) node, TSMC’s forthcoming 2-nm node is expected to appear on the market circa 2025/2026, and the 1-nm node is tentatively scheduled for 2028.

Can you imagine what MCUs will be capable of circa 2030? My head is spinning contemplating the possibilities. I think it’s time for me to take another dried frog pill (or three). What about you? What do you think about all of this?
